Details: GLM effect size indices

keywords jamovi, GLM, effect size indices, omega-squared, eta-squared, epsilon-squared

2.6.1

Introduction

Standardized Effect size indices produced by GLM module are the following:

- \(\beta\) : standardized regression coefficients

- \(\eta^2\): (semi-partial) eta-squared

- \(\eta^2\)p : partial eta-squared

- \(\omega^2\) : omega-squared

- \(\omega^2\)p : partial omega-squared

- \(\epsilon^2\) : epsilon-squared

- \(\epsilon^2\)p : partial epsilon-squared

All coefficients but the betas are computed with the approapriate function of the R package effectsize, with some adjustment.

\(\beta\) : beta

For continuous variables, it simply corresponds to the B coefficient obtained after standardizing all variables in the model. The standardization of the continuous variables is done before any transformation is applied, so if a complex model requires interaction or polynomial terms, the terms are computed after standardization, and the \(\beta\) are consistent.

For categorical variables, however, some comments are in order:

Categorical variables are not standardized in GAMLj, so the \(\beta\) should be interpreted in terms of

standardized differences in the dependent variable between the levels

contrasted by the corresponding contrast. Consider the following

example: Two groups (variable groups) of size 20 and 10

respectively, are compared on a variable Y. If one uses GAMLj default contrast coding

(simple), the B is the difference in groups means. The

\(\beta\) is the difference between the

average z-scores of the dependent variable between the two groups.

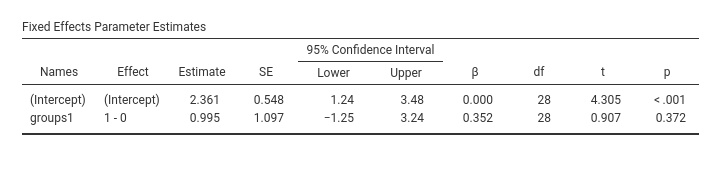

Assume these are the results:

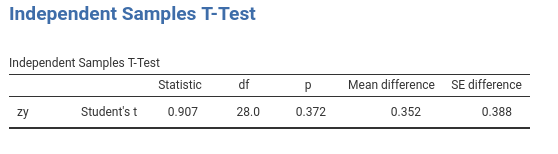

The beta is 0.352, so it means that if we compute the mean difference between groups in the standardized y, we obtain 0.352. In fact.

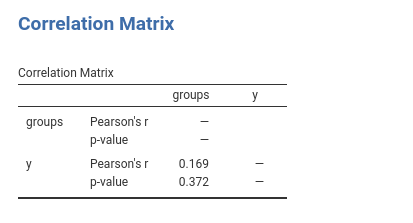

However, the \(\beta\) you obtain is not the correlation between zy and groups. The correlation is 0.169:

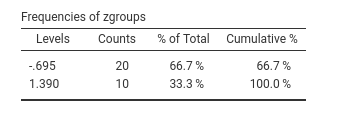

Why is there this discrepancy? Because the groups are not balanced, so when the correlation is computed, the variable groups is standardized, so the contrast coding values depend on the relative size of the groups. The actual groups coding values used by the Pearson’s correlations are the following:

Thus, the correlation corresponds to running a regression with zy as dependent variables and a continuous variable featuring either -.695 or 1.390 as values. The \(\beta\) yielded by GAMLj, instead, is the mean difference between X levels on the standardized Y. Please notice that other software may yield different \(\beta\)’s for categorical variables.

If two groups are balanced and homeschedastic, the \(\beta\) associated with a

deviation contrast corresponds to the fully standardized

coefficient.

\(\eta^2\): (semi-partial) eta-squared

This is the proportion of total variance uniquely explained by the associated effect. Being \(SS_{eff}\) the sum of squares of the effect, \(SS_{res}\) the sum of squares of the residuals or of SS error, and \(SS_{model}\) the sum of sum of squares of the whole model, we have:

\[\eta^2={{SS_{eff} \over {SS_{model}+SS_{res}}}}\]

where \(SS_{model}+SS_{res}=SS_{total}\) and \(SS_{total}=\sum(y_i-\bar{y})^2\) and \(SS_{model}=\sum(\hat{y_i}-\bar{\hat{y}})^2\).

Please notice that although the computation of the effect size

indexes and their confidence intervals is carried out employing effectsize R package,

GAMLj makes a correction to the

computation of \(\eta^2\) and of the

other non-partial indices. effectsize R package, infact,

defines the total sum of squares as \(SS_{total}^*=\sum{SS_{f}+SS_{res}}\), where

\(f\) is any effect in the model. For

balanced designs and many other models, \(SS_{total}^*=SS_{total}\), so no issue

arises. However, there are certain models in which \(SS_{total}^*\ne SS_{total}\), and so the

index looses some of its properties when computed based on \(SS_{total}^*\). GAMLj operates a correction such that all the

non-partial indeces are always computed based on \(SS_{total}=SS_{model}+SS_{res}\).

With the correction, one property \(\eta^2\) retains even when \(SS_{total}^*\ne SS_{total}\) is that \(\eta^2=r_{sp}^2\), where \(r_{sp}\) is the semi-partial correlation

(Cohen, West, and

Aiken 2014). We can use the exercise dataset

from (Cohen,

West, and Aiken 2014) to see this in practice (refer to

Multiple regression, moderated regression,

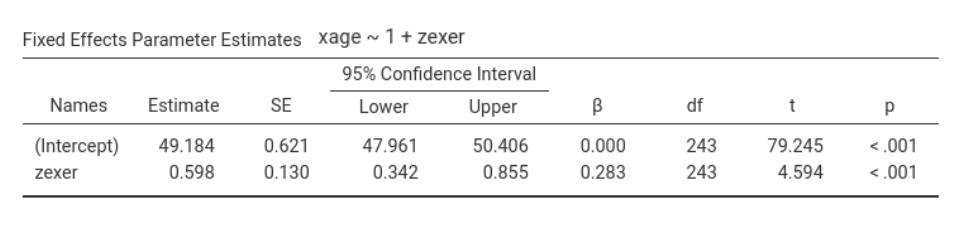

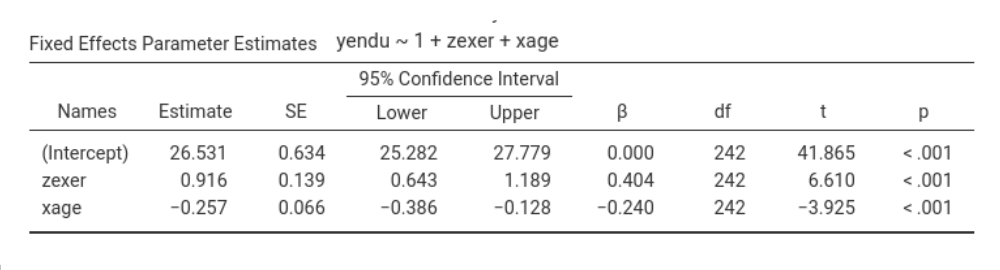

and simple slopes for a complete analysis). If we run a multiple

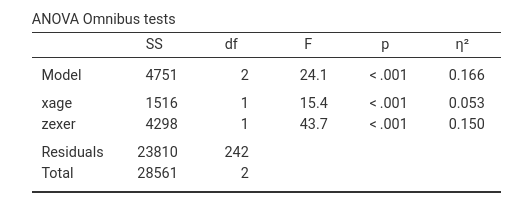

regression yendu~xage+zexer and ask for the \(\eta^2\)’s, we obtain the following

results:

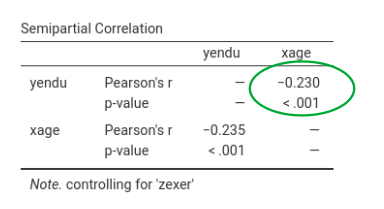

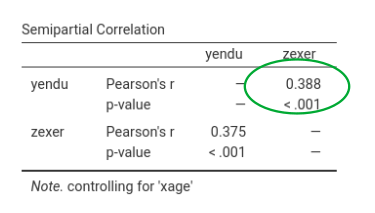

Going in jamovi, Regression->Partial correlation we can compute the semi-partial correlation of each IV coviariating the other. This gives:

Squaring it gives \(r_{yx.z}^2=-0.230^2=0.0529\), which is equal to the corrisponding \(\eta^2=.053\).

Squaring the second \(r_{sp}^2\) gives \(r_{yz.x}^2=0.388^2=0.1504664\), considering rounding, which is equal to the corrisponding \(\eta^2=.150\).

If we used \(SS_{total}^*=\sum{SS_{f}}+SS_{res}\), results would be:

- \(SS_{total}^*=1516+4298+23810=29624\)

- \(SS_{yx.z}^*=1516/29624 =0.0511747\)

- \(SS_{yz.x}^*=4298/29624 =0.1450851\)

that are clearly not corresponding to the \(r_{sp}^2\), as they should be. We should note, however, that the two methods of estimation usually give very similar results, even when \(SS_{total}^* \ne SS_{total}\) and exactly the same results when \(SS_{total}^*=SS_{total}\).

The same reasoning holds for all the non-partial indices.

For many people, the fact that \(SS_{model}^* \ne SS_{model}\) may come as a surprise. Maybe because in the ANOVA tradition, with balanced designs, \(\sum{SS_{f}}\) is always equal to \(SS_{model}\), or maybe because it would be nicer if it was always like in the ANOVA. Since we have to accept that in the GLM \(\sum{SS_{f}}\) is not necessarily equal to \(SS_{model}\), let us see when this happens.

There are two cases: The easiest to understand is when \(\sum{SS_{f}} \lt SS_{model}\). This case happens when the independent variables are positively (or all negatively) correlated so each variable explains a unique part of the variance, but the model sum of square involves also some shared variance, which ends up in \(SS_{model}\) but not in \(\sum{SS_{f}}\).

A little trickier is the case when \(\sum{SS_{f}} \gt SS_{model}\), because it seems strange that the sum of effects is larger than the overall combined effect.

Consider a regression \(y=a+b_{yx.z}x+b_{yz.x}z\). Recall the the \(SS_{yx.z}\) (\(x\) explains \(y\) keeping constant \(z\)) is computed as \(SS_{model}-SS_{yz}\), where \(SS_{yz}\) is the sum of squares explained by \(z\) without \(x\) in the model, and \(SS_{yz.x}=SS_{model}-SS_{yx}\). It follows that the \(\sum{SS_f}=2\cdot SS_{model}-SS_{yx}-SS_{yz}\) is larger than \(SS_{model}\) when \(SS_{model} > SS_{yx}+SS_{yz}\). This necessarily means that at least one variable explains more variance while keeping constant the other than alone.

Indeed, in the example above about exercising, the SS of age alone is 452, and exer alone is 3234, which sum to 3686, less than 4751, the multiple regression model SS.

The question is now: when does this happen? Well, it happens when \(B_{yz}\), the coefficient associated with \(z\) in simple regression, is smaller (in absolute value) than the partial coefficient of \(B_{yz.x}\) of a multiple regression. Because \(B_{yz.x}=B_{yz} - B_{yx.z}\cdot B_{xz}\), this happens when \(B_{yz}\) and \(B_{yx.z}\cdot B_{xz}\) have different signs: therefore, we have a suppression effect!

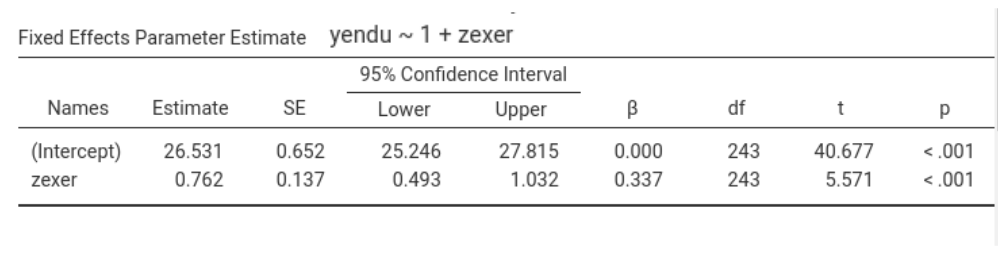

In the example above, focusing on exer (the z variable), we have \(B_{yz.x}=.916\), \(B_{yx.z}=-.257\) and \(B_{xz}=.598\), thus \(B_{yx.z}\cdot B_{xz}=-.257*.598=-0.153686\), which has a different sign than \(B_{yz}=.762\). Thus, the effect of exercising is stronger when keeping constant age than alone: age suppresses the effect of exercising on endurance.

\(\eta^2\)p : partial eta-squared

This is the proportion of partial variance uniquely explained by the associated effect. That is, the variance uniquely explained by the effect expressed as the proportion of variance not explained by the other effects. Here the variance explained by the other effects in the model is completely partialed out. Its formula is:

\[\eta^2p={{SS_{eff} \over {SS_{eff}+SS_{res}}}}\]

clearly, if there is only one independent variable, \(\eta^2=\eta^2p\)

\(\omega^2\) : omega-squared

This is the expected value in the population of the proportion of variance uniquely explained by the associated effect. In other words, it is the unbiased version of \(\eta^2\). There are different formulas to visualize its computation, here is one. If \(df_{res}\) are the degrees of freedon of the residual variance, \(df_{eff}\) are the degrees of freedom of the effect, we have:

\[\omega^2={{SS_{eff}-SS_{res} \cdot ({df_{eff}/df_{res}) \cdot }}\over{ SS_{model}+SS_{res}(df_{res}+1)/df_{res}}}\]

It’s clear that omega is similat to \(\eta^2\), but applies a correction for the denominator.

\(\omega^2\)p : partial omega-squared

This is the expected value in the population of the proportion of partial variance uniquely explained by the associated effect. In other words,it is the unbiased version of \(\eta^2p\). With N being the sample size, We have:

\[\omega^2p={{SS_{eff}-SS_{res} \cdot ({df_{eff}/df_{res}) \cdot }}\over{ SS_{eff}+SS_{res} \cdot [{(N-df_{eff})/df_{res}}] }}\]

It’s clear that omega is similat to \(\eta^2p\), but applies a correction for the degress of freedom. In fact, as N increases, the two indices converge.

\(\epsilon^2\)p : epsilon-squared

Epsilon-squared is another correction of \(\eta^2\), but the correction involves only the estimation of the sum of squares of the effect, not the partial variance on which the effect is compared

\[\epsilon^2={{SS_{eff}-SS_{res} \cdot ({df_{eff}/df_{res}) \cdot }}\over{ SS_{model}+SS_{res}}}\]

\(\epsilon^2\)p : partial epsilon-squared

As for the non-partial Epsilon, the partial Epsilon-squared is a correction of \(\eta^2p\), but the correction involves only the estimation of the sum of squares of the effect, not the partial variance on which the effect is compared

\[\epsilon^2p={{SS_{eff}-SS_{res} \cdot ({df_{eff}/df_{res}) \cdot }}\over{ SS_{eff}+SS_{res}}}\]

Simple Effects

From version 2.6.1 on, all the effect size indices are available also

for the simple effects. To compute them, GAMLj extracts the SS of the simple effect

from R emmeans F-tests. The SS residuals and SS model is

extracted from the model summary, given that both SS do not change when

simple effects are computed. Then the indices are computed using the

previously described formulas.

In particular, if the simple effect is \(se\): \[SS_{res}=\sigma^2\cdot df_{res}\]

where \(\sigma\) is extracted as

sigma(model).

\[SS_{model}={{F_{model}\cdot df_{model}} \cdot {SS_{res} \over {df_{res}}}}\]

and

\[SS_{se}={{F_{se}\cdot df_{se}} \cdot {SS_{res} \over {df_{res}}}}\]

Confidence intervals

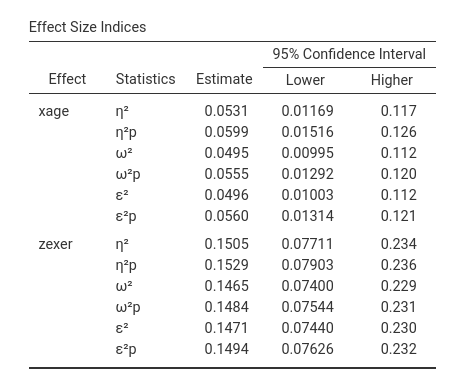

In option tab Options it is possible to ask additional

tables for the effect size indices, containing the effect size indices

and their confidence intervals (here an example with the

exercise dataset)

Details for the confidence intervals computation can be found in Ben-Shachar, Makowski & Lüdecke (2020). Compute and interpret indices of effect size. CRAN

Comments?

Got comments, issues or spotted a bug? Please open an issue on GAMLj at github or send me an email